Return to Sender

This story shows how the political impact of electronic communication is a global phenomenon that affects institutions of governance and civic discourse.

Labels: e-mail etiquette

A blog about digital rhetoric that asks the burning questions about electronic bureaucracy and institutional subversion on the Internet.

Labels: e-mail etiquette

Labels: book reviews

Labels: digital parenting, social networking

Labels: Google

Labels: e-mail etiquette, science

Labels: blogging, government websites

Labels: personal life

Labels: consumerism, religion, search engines

Labels: government websites

The news about pending legislation in France that would require Apple's proprietary iTunes technology to be decoupled from the company's iPod player may remind some readers of last year's story about French resistance to the Google Print electronic archive initiative. In both cases, critics charged that American cultural hegemony was being furthered even as corporate technological monopolies were being strengthened.

Labels: composition, distance learning, military

Labels: database aesthetics, Iraq war

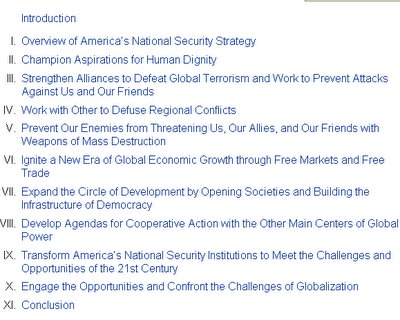

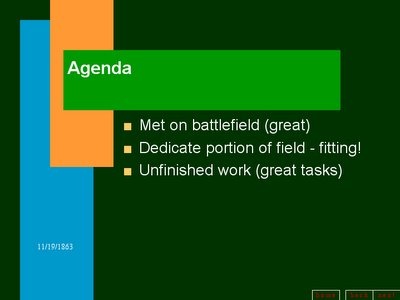

Labels: Iraq war, powerpoint politics, White House

Labels: higher education, Middle East

Labels: government reports, justice system, White House

With so much mainstream press about asocial video games, it's refreshing to see that this month Water Cooler Games covers two games aimed at enacting nonviolent resolutions to incendiary political conflicts.

Labels: serious games, sports

Labels: urbanism

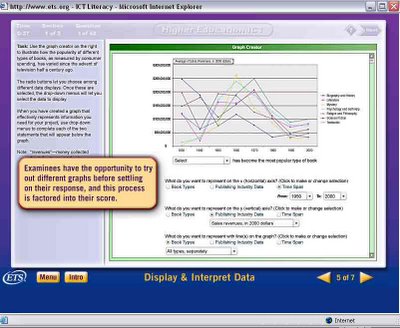

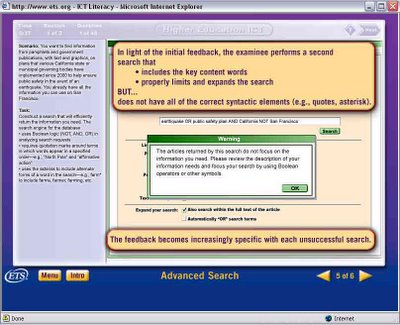

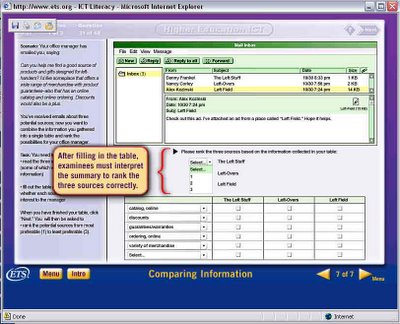

From my information literacy perspective, I would say that Project SAILS is developing a much better multiple-choice tool, which is actually being facilitated by university librarians. Yet I don't think any such limited format could ever adequately model the complexities of real searches and forays into electronic communication. There are too many correct paths to an information literacy goal and too many wrong paths as well.

Labels: hoaxes, information literacy, teaching

Labels: Microsoft

Labels: Google, government websites

Today is Blog against Sexism Day, in honor of International Women's Day, which originally commemorated the labor, hardships, and unionization efforts of female textile workers who earned subsistence livings under terrible conditions. You can also honor the day with an e-card to send socialist, feminist greetings to that special woman in your life. The official International Women's Day website now has a corporate sponsor, HSBC. You don't suppose they fund any sweatshops, do you?

Labels: blogging, White House

Labels: religion

Labels: medicine, personal life, UCLA

Labels: print media, USC

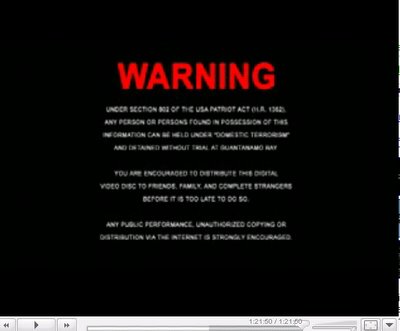

Labels: hoaxes

Labels: Iraq war, White House

Labels: digital archives

Labels: Iraq war, serious games, USC

Labels: distance learning, USC